Marcelo V. Wust Zibetti, PhD, is an assistant professor of radiology at NYU Grossman School of Medicine and scientist at the Center for Advanced Imaging Innovation and Research. His investigations explore new acquisition and reconstruction techniques in magnetic resonance imaging (MRI), with the aim of making MRI methods more efficient. Together with Ravinder Regatte, PhD, professor of radiology and orthopedic surgery at NYU Grossman, Dr. Zibetti leads research on a data-driven learning framework for fast, quantitative mapping of the knee, a project supported by the National Institute of Arthritis and Musculoskeletal and Skin Diseases. Our conversation was edited for clarity and length.

What would you say is the focus of your research, in general terms?

My research is essentially about the process that begins with data acquisition and ends with image reconstruction in MRI. I started with optical images for cameras, later moved on to computed tomography, and then MRI. I have always liked the link between what a machine acquires and the process that gives us humans something meaningful, like an image, a volume, or a measurement.

In MRI, we go from radio waves to information that can be visualized in various ways but doesn’t necessarily have to be visualized at all. Is there a part of the imaging process you’re most interested in as a researcher?

What I like in MRI is that there’s a whole world of possibilities to work with. There’s not one tool that solves everything, and what I value the most are unique approaches that sometimes come from unusual places. Working with acquisition and reconstruction forces me to look at problems from new perspectives.

In your work you look for ways to make acquisition and reconstruction of MR data and images more efficient. Does more efficient just mean faster?

Not necessarily. Efficiency also means you’re not wasting time with things that don’t matter. For example, looking at the same thing again from the same perspective is useful in very noisy situations, because noise is constantly changing—so that’s a bit like trying to see something in the dark. But in better conditions, what typically gives you more information is looking from different perspectives. Developing acquisitions that do this has historically relied on mathematical models that are improved over time, but now there are machine learning approaches that can help us investigate other possibilities.
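The point about repeated looks in noisy conditions can be illustrated with a toy simulation. This is a minimal sketch, assuming independent Gaussian noise and a made-up true value; the function name `spread_of_average` and all numbers are hypothetical and not taken from Dr. Zibetti's work.

```python
import random
import statistics

def spread_of_average(num_repeats, noise_sigma=1.0, trials=2000, seed=0):
    """Standard deviation of the mean of `num_repeats` noisy looks (toy model)."""
    rng = random.Random(seed)
    true_value = 5.0  # arbitrary quantity being measured (assumption)
    averages = []
    for _ in range(trials):
        # Each look sees the same value plus fresh, independent noise.
        looks = [true_value + rng.gauss(0.0, noise_sigma) for _ in range(num_repeats)]
        averages.append(sum(looks) / num_repeats)
    return statistics.pstdev(averages)

single_look = spread_of_average(1)
sixteen_looks = spread_of_average(16)
print(f"spread with 1 look:   {single_look:.3f}")
print(f"spread with 16 looks: {sixteen_looks:.3f}")
```

Under these assumptions the spread shrinks roughly with the square root of the number of repeats, so sixteen looks buy only about a fourfold reduction in noise. That diminishing return is one way to read the remark that, in better conditions, new perspectives tend to be worth more than repeats.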

Do you mean that machine learning can provide clues to new formal solutions?

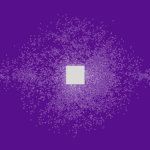

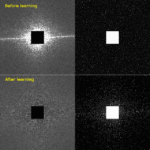

In some sense, yes. MRI has been improved in large part through trial and error: we try something new, and if it’s better, we try to understand why, and then build on that. But now we’re at the point of having very rich models. So, why not give them to machines to do trial and error in silico? Machine learning can now test more scenarios in the blink of an eye than we ever could at the scanner, and it may surprise you with a solution you’d never think of. That’s what we’re doing now, and what we’re seeing is fascinating.

Can you talk about any surprises that you have discovered in the course of your research?

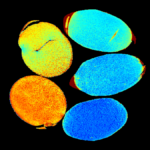

One surprise is how closely what a machine learns aligns with what humans have learned. When we were first trying to apply machine learning to learn the sampling pattern in Cartesian acquisitions, we saw that it learned in just a couple of minutes what we humans have learned over decades: things like complex conjugate symmetry of k-space, random sampling and variable distribution, spacing between samples typical of parallel MRI… Something I’d like to investigate more is what else the machine has learned that maybe we have not.
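The complex conjugate symmetry mentioned here can be checked numerically. The sketch below assumes a one-dimensional, purely real-valued signal with made-up sample values and uses a plain discrete Fourier transform written with the standard library; the helper name `dft` is hypothetical. Actual MR images usually carry some phase, so in practice the symmetry holds only approximately.

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform of a short sequence (illustration only)."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * m / n) for m, x in enumerate(signal))
        for k in range(n)
    ]

# Made-up real-valued "image" samples (assumption for illustration).
real_signal = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
kspace = dft(real_signal)
n = len(kspace)

# For a real signal, the sample at frequency -k equals the conjugate of the sample at +k.
max_mismatch = max(
    abs(kspace[(n - k) % n] - kspace[k].conjugate()) for k in range(n)
)
print(f"largest deviation from conjugate symmetry: {max_mismatch:.2e}")
```

Because one half of k-space predicts the other in this idealized case, a sampling pattern that covers both halves equally spends time on partly redundant data.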

In MRI, as in any domain, the more advances have been made, the harder it is to meaningfully improve. You’re in the business of looking at algorithms and searching for greater efficiency. In some of your work, the gains are as high as fifty percent. But do you have a sense of where MRI’s efficiency limit may be?

That’s very difficult to predict. There is of course a limit, but it’s a soft limit. As you accelerate, you keep losing information. At some point things cross from still good to no longer good. Where that point is depends on personal perspective, application, on what you think is necessary, so it’s a complicated issue.

Right now, one of the best tools for accelerating MRI is deep learning. With deep learning, some people think, compressibility becomes something of a manifold: an arbitrary representation that we cannot even imagine but where things are somehow highly compressible. But it’s not just this. If you can make improvements at every step of the acquisition and reconstruction process, in the end what you gain can definitely be surprising. It’s also possible that someone will come up with new kinds of hardware like different coils, or even coils on the periphery or outside the MRI scanner. That would go to the idea of seeing things with multiple eyes from different perspectives. The point is, we just don’t know.

You mentioned more and more abstract representational domains that may be hard to imagine. What tools do you use to explore new algorithmic possibilities or the potential for increased compressibility? How do you look for those things?

I’ve always liked talking to people from different tribes: physicians, physicists, engineers, artists, people with different backgrounds, because they have different perspectives and completely different solutions for the same problems. There are so many possible models for representing things. Most of the models used in MRI were first developed by mathematicians, but sometimes these models are quite abstract. Sparsity is an example, and I think now there’s some intuition about it because people have found ways to apply it. But some models are not easy to exemplify. So, I’m not sure how to answer except by saying that I’m aware that increased complexity may turn out to be useful in solving some problems we still have.

When you publish a new algorithm and share it with the research community, do people engage with that work?

Not always. Sometimes new ideas are hard to comprehend and to explain. And the harder something is to comprehend, the easier it is to discard. I also think there’s a lot of power in trends: you may have a wonderful solution, but the prevailing trend can be so loud that it is difficult for people to hear you.

Is there an example you have in mind?

One example is where we learned a sampling pattern using evolutionary learning. This approach is designed to work with discrete problems, and here we had a discrete problem—and that’s why the solution was so efficient.

You’re talking about bias-accelerated subset selection.

Yes. It’s been a couple of years now since the paper was published, and some people cite it but nobody else has tried to build on it. At the same time, I see a lot of attention to deep learning approaches that are not as good for these problems. So I’m thinking, why is that? And it may be because deep learning is such a strong trend.

Can you briefly explain the principle behind evolutionary learning?

This is an area of optimization useful in combinatorial problems. In simple terms, you change things and if the changes work better, you keep them; if worse, you ignore them. Just as deep learning is inspired by neuronal networks, evolutionary learning is inspired by natural selection.
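The change-and-keep-if-better principle can be sketched on a toy subset selection problem. This is not the bias-accelerated subset selection algorithm discussed above; it is a generic mutate-and-select loop, and the function `evolve_subset`, the cost function `toy_cost`, and the weights are all hypothetical choices made for illustration.

```python
import random

def evolve_subset(n, k, cost, iterations=2000, seed=0):
    """Choose k of n indices by random swaps, keeping only swaps that lower the cost."""
    rng = random.Random(seed)
    current = set(rng.sample(range(n), k))
    current_cost = cost(current)
    for _ in range(iterations):
        # Mutation: trade one selected index for one unselected index.
        removed = rng.choice(sorted(current))
        added = rng.choice(sorted(set(range(n)) - current))
        candidate = (current - {removed}) | {added}
        candidate_cost = cost(candidate)
        # Selection: keep the change if it works better, otherwise ignore it.
        if candidate_cost < current_cost:
            current, current_cost = candidate, candidate_cost
    return sorted(current), current_cost

# Toy cost (assumption): low indices matter more, loosely like the center of k-space.
weights = [1.0 / (1 + i) for i in range(32)]

def toy_cost(selected):
    """Total weight of the positions that were NOT sampled."""
    return sum(w for i, w in enumerate(weights) if i not in selected)

pattern, final_cost = evolve_subset(32, 8, toy_cost)
print("selected positions:", pattern)
print("remaining cost:", round(final_cost, 4))
```

The search space here is discrete: a position is either sampled or not, which is why a swap-based mutation fits naturally. In a realistic setting the cost would presumably come from reconstruction error on training data rather than from fixed weights.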

Are there any examples you’d like to share of how different perspectives or ideas from different tribes have benefited your work?

When I was working on my PhD in super-resolution for optical imaging, I was in the field of electrical engineering. At some point, I figured out that the tools we had were not adequate to the problem I was dealing with, and I found some literature that started moving me toward other fields like applied mathematics and mechanical engineering. I started noticing how the language and applications were different but the underlying mathematical model was similar. And I began to see that incremental developments in one discipline, when combined with those from another discipline, could provide a better overall picture.

Seeing something you were very familiar with in a new, revealing light is a big part of the challenge of remaining productive as a researcher, especially as you progress in your specialized area, right?

What happens is that it’s harder to see what is new and what is just a reinvention of something already existing. One of the things I most enjoy about learning from other disciplines is that there’s so much there waiting to be noticed.

You see it as a question of finding the right idea and matching it to the right problem.

Exactly. I think we have a natural tendency toward the new, and novelty always lends some shine, but there is so much out there already that we shouldn’t close our eyes to.

What led you to MRI?

After my PhD, I went on to a postdoc with Alvaro De Pierro, a mathematician who is an expert in computed tomography. One of the nicest things he did was, he wanted to learn MRI and he said to me, why don’t you learn and teach me? And he gave me a book. I read it, gave some lectures to the team, and once they thought they understood enough, we went and talked to an MRI lab—like ours, but much smaller, at UNICAMP in Brazil—and said, hey, we have some ideas and would like to collaborate.

You learned MRI from a book, taught it to a research team that specialized in CT, and then the team just approached an MRI lab to start a collaboration?

Yes, and the MRI team—they were doing functional MRI—had several EPI datasets from dozens of patients with some artifacts that they hadn’t been able to correct. And because of the artifacts, they could not publish their findings. They gave us some samples to study, and of course I was like, hey, it’s in the book: page 210, EPI, ghosting artifact. We did a literature review and found a couple of suggestions to fix it, and based on that, with some modifications, we cleaned up a year’s worth of scan data.

So, you’re not just interested in fundamental ways of thinking but are also able to learn them. That’s not something everyone can do.

Well, I was lucky to learn enough about MRI to enable me to talk to MRI people and understand the problem they had. If you asked me right now how much I knew back then, it was maybe one percent of what I know today. But I was lucky, because that one percent was exactly what I needed to communicate about and solve the given problem.

What brought you to New York?

My wife had a work opportunity here and I decided to take a sabbatical from my job as professor in Brazil. Alvaro, who had advised my postdoctoral research, introduced me to Gabor Herman, who is an expert in CT at the City University of New York and a former advisor of Alvaro’s. So, we wrote a project, I requested leave, and that later led to a connection with NYU Langone.

Can you talk about how that connection came about?

When I mentioned to Gabor that I had worked with Alvaro on MRI, Gabor said that he had a good friend at NYU Langone who works on MRI, and made an introduction. One thing led to another. A team at NYU Langone was developing a GRASP technique and they were seeing some unusual artifacts. We talked, they gave me some data, I played with it and I noticed that their artifacts were of the same kind as in my super-resolution thesis—an unusual problem to which I had a perhaps unusual solution. They were very surprised because they had been trying for some time to solve this issue. But I saw it quickly because I had encountered it before in a very different field.

This goes to not inventing a new tool but finding and adapting an existing tool that fits a particular need.

Exactly. And the key to that is diverse perspectives.

And how did that connection lead to your joining our imaging research center?

In 2016, I had the opportunity to join an investigation on accelerating T1ρ led by Ravi Regatte. Ravi had a new grant and needed a postdoc. At the time there wasn’t a lot of work on acceleration for T1ρ, so I was excited about the possibilities. In fact, the first thing we did was write a compressive sensing algorithm and compare twelve existing approaches, because there’s no way to tell what’s better unless you try—not everything, because that’s impossible, but a lot.

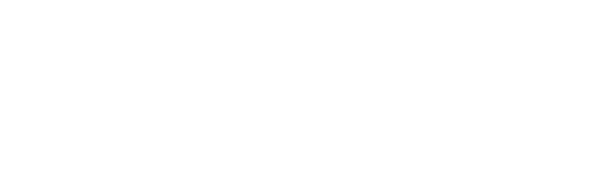

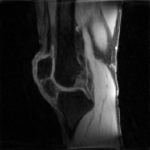

Why is T1ρ an interesting type of contrast?

T1ρ is nice because you don’t need any injectable contrast. The contrast is produced by manipulating the magnetic field, and it’s essentially something between T1 and T2 where the relaxation is more sensitive to water bound to macromolecules, which include proteins. So, it gives a touch of molecular imaging to MRI, which is useful in several applications.
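To make the idea of quantitative T1ρ mapping concrete, the sketch below estimates T1ρ for a single voxel. It assumes the commonly used mono-exponential model S(TSL) = S0 · exp(−TSL / T1ρ), where TSL is the spin-lock time; the interview does not specify a model, and the spin-lock times, signal values, and the helper `fit_t1rho` are synthetic and hypothetical.

```python
import math

def fit_t1rho(spin_lock_times_ms, signals):
    """Fit ln(S) = ln(S0) - TSL / T1rho by ordinary least squares (log-linear fit)."""
    logs = [math.log(s) for s in signals]
    n = len(logs)
    mean_t = sum(spin_lock_times_ms) / n
    mean_l = sum(logs) / n
    slope = sum(
        (t - mean_t) * (l - mean_l) for t, l in zip(spin_lock_times_ms, logs)
    ) / sum((t - mean_t) ** 2 for t in spin_lock_times_ms)
    intercept = mean_l - slope * mean_t
    # The slope of the fitted line is -1 / T1rho; the intercept is ln(S0).
    return -1.0 / slope, math.exp(intercept)

# Synthetic, noise-free voxel (all values are assumptions for illustration).
tsl_ms = [2.0, 10.0, 20.0, 40.0, 60.0]
true_t1rho_ms, true_s0 = 45.0, 100.0
signals = [true_s0 * math.exp(-t / true_t1rho_ms) for t in tsl_ms]

t1rho_ms, s0 = fit_t1rho(tsl_ms, signals)
print(f"estimated T1rho: {t1rho_ms:.2f} ms, estimated S0: {s0:.2f}")
```

Repeating such a fit for every voxel gives a T1ρ map. Each spin-lock time requires its own acquisition, which is one reason accelerating these scans matters.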

And the applications that you were working on then were primarily focused on cartilage imaging in the knee.

Yes, in the cartilage you have proteoglycans, which are macromolecules, and in diseases like osteoarthritis one of the first things that people think happens is you start losing those macromolecules. T1ρ gives you a way to probe whether someone may be developing osteoarthritis before you actually see overt signs of degradation.

Your academic career has taken you through so many fields. Do you feel like MRI is going to hold your interest?

Definitely. MRI is a very flexible machine, and there is so much we are still discovering. We also have to improve much of what we have in order to make it more economically feasible. Many of the research sequences that allow us to see new things are not practical in clinical use, but maybe with more work we can make them practical. For someone like me, who likes a diversity of problems, this is the perfect situation.

You grew up in Brazil, and did your undergraduate, graduate, and postdoctoral training there. What was it like for you to then live in a different culture and work in a different research culture?

The beginning was not easy. There was a lot to consider: not only work but also family. It’s a different country, different food, different tastes, and people may see you differently, maybe as a stranger. But I think in life it’s the same as in science: you become a part of a different tribe and find a common ground. We came thinking we would go back to Brazil after two years, but when I started at NYU Langone, I thought it might be a unique opportunity, so I extended my leave for another two years. My wife and daughter were very happy about the extension, and after a while I realized that there was no reason to go back. Leaving a career as a professor in Brazil was a leap of faith, but I was so much happier here that it didn’t matter to me whether I would ever become a professor again. I knew that I’d be happy doing what I do. The environment here at NYU Langone and all the resources, the people, the things you can learn: for someone like me, it’s a dream.

Related Resources

MATLAB scripts for learned pulse sequence parameters of magnetization-prepared gradient echo sequences used in multi-component T2 and T1ρ mapping.

MATLAB scripts for data-driven optimization of magnetization-prepared gradient echo sequences used in T1ρ mapping.

Simultaneous machine learning optimization of parallel MRI sampling pattern and variational-network image reconstruction parameters.

Machine learning optimization of k-space sampling for accelerated MRI.

An accurate, rapidly converging, low-compute algorithm for approximating solutions to the least absolute shrinkage and selection operator (LASSO) problem.