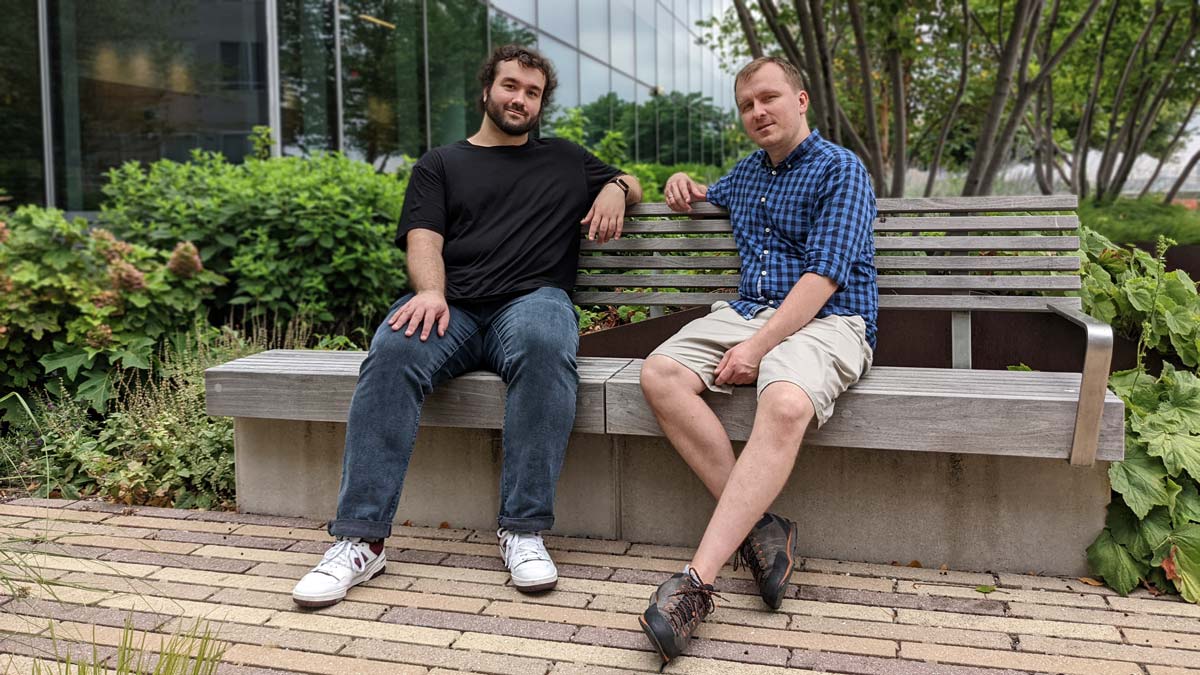

Krzysztof Geras, PhD, assistant professor of radiology at NYU Grossman School of Medicine, leads a research group focused on machine learning applications to breast cancer screening. Jan Witowski, MD, PhD, postdoctoral fellow working with Dr. Geras, is the lead author of the group’s new study describing an AI system for detecting breast cancer in dynamic contrast-enhanced MRI.

In a paper published on September 28 in Science Translational Medicine, the authors find that their machine learning model, if used to assist radiologists, has the potential to significantly reduce unnecessary biopsy referrals.

The findings echo prior work the team has done on a range of medical imaging modalities, including mammography, digital breast tomosynthesis, and ultrasound. We asked Dr. Geras and Dr. Witowski how their latest research fits in with a bigger vision for AI in diagnosing breast cancer. Our conversation was edited for clarity and concision.

Can you give us a synopsis of your latest research study?

Jan Witowski: We just published a study that describes an AI-based system we developed to predict whether an MRI exam contains breast cancer.

This is a large study, very thoroughly evaluated on patients at NYU Langone and other hospitals in the U.S. and Europe. First, we show that this system is just as good as radiologists in detecting breast cancer in MRI exams. Second, we show in a simulation that using this system may help avoid unnecessary biopsies.
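To illustrate what a biopsy-reduction simulation can look like in principle, here is a minimal sketch. It assumes a hypothetical setup that is not the study's protocol: each exam that radiologists referred to biopsy has an AI malignancy score and a pathology outcome, and exams scoring below a chosen threshold are counted as "not biopsied." The class name, threshold, and toy data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BiopsyCase:
    ai_score: float   # hypothetical AI malignancy probability in [0, 1]
    malignant: bool   # ground truth from pathology


def simulate_biopsy_reduction(cases, threshold):
    """Count benign biopsies avoided and cancers missed if every case
    scoring below `threshold` were not sent to biopsy."""
    avoided = sum(1 for c in cases if c.ai_score < threshold and not c.malignant)
    missed = sum(1 for c in cases if c.ai_score < threshold and c.malignant)
    benign_total = sum(1 for c in cases if not c.malignant)
    return {"benign_avoided": avoided, "benign_total": benign_total, "cancers_missed": missed}


# Hypothetical toy data: five exams that radiologists referred to biopsy.
cases = [
    BiopsyCase(0.02, False),
    BiopsyCase(0.10, False),
    BiopsyCase(0.45, False),
    BiopsyCase(0.70, True),
    BiopsyCase(0.95, True),
]
print(simulate_biopsy_reduction(cases, threshold=0.2))
```

The threshold is the key design choice: raising it avoids more benign biopsies but eventually starts missing cancers, so any real simulation has to report both numbers together.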

Krzysztof, in a study published in 2019 you and colleagues found that neural networks looking for cancer in mammograms were as accurate as human radiologists, and that in combination the human and machine readings were even more accurate than either the AI or human experts on their own. Since then, you and colleagues have made similar findings in ultrasound, and now in MRI. What are these findings adding up to?

Krzysztof Geras: This is a part of a bigger research program that we’ve been working on for a few years now, which is going to culminate in neural networks that learn simultaneously from multiple imaging modalities. The steps that you enumerated—mammography, ultrasound, MRI—are parts of this bigger idea.

What we’re hoping to do is combine our base neural networks that use individual image modalities and make predictions based on information synthesized from these component networks. We’re hoping to find very subtle correlations among different imaging modalities and learn signal that is too weak for humans to notice.
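One simple, widely used way to combine modality-specific networks is late fusion: each modality's network emits a feature vector, the vectors are concatenated, and a small classifier head makes the final prediction. The sketch below shows only that fusion step under this assumption; it is not the group's architecture. The modality names, feature values, and weights are hypothetical placeholders, and in practice the fusion head would be learned rather than hand-set.

```python
import math


def concat_features(per_modality):
    """Concatenate per-modality feature vectors in a fixed (alphabetical)
    modality order so that fusion weights always line up with features."""
    fused = []
    for name in sorted(per_modality):
        fused.extend(per_modality[name])
    return fused


def fused_prediction(per_modality, weights, bias):
    """Logistic-regression head over the concatenated features."""
    x = concat_features(per_modality)
    if len(x) != len(weights):
        raise ValueError("weight vector must match the fused feature length")
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical embeddings produced by three modality-specific networks.
features = {"ultrasound": [0.0, 0.3], "mammography": [0.2, 1.0], "mri": [0.5]}
print(concat_features(features))
print(fused_prediction(features, weights=[1.0, 0.0, 0.0, 0.0, 0.0], bias=-0.2))
```

Because the head sees all modalities at once, it can in principle weight a feature from one modality differently depending on what another modality shows, which is the kind of cross-modality signal the answer above describes.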

Are you envisioning a modality-agnostic AI?

K.G.: Not really. Modality agnosticism may be a byproduct of our research, but what we’re building is a neural network capable of synthesizing information from all available types of images.

Let’s go back to the idea of machine learning algorithms and people working together. Linda Moy, MD, one of your frequent coauthors, recently referred to this phenomenon as “collective intelligence,” meaning that AI and radiologists complement each other by learning different features. However, humans, unlike machines, can be asked to explain their reasoning. How do you think about explainability on the machine side of this collective intelligence?

K.G.: In the context of classification of imaging exams as normal or containing malignancy, explainability is relatively simple. We can say that a neural network is explainable if it points to the part of the image that indicates malignancy. Whether that location is correct can be verified based on retrospective data, such as biopsy results, with human help.

As we move forward, I expect neural networks to make much more interesting predictions than just whether there’s a malignancy in a certain place. For example, neural networks may predict not just whether a person is healthy at the moment but whether a person will be healthy in a couple of years. Explainability is much more of a challenge there, because it’s hard to say what it would actually mean. That’s something that the machine learning community will definitely have to tackle.
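Dr. Geras's working definition of explainability (the network points to the image region that indicates malignancy, and that location is checked against retrospective data such as biopsy results) resembles the "pointing game" check used in the saliency-map literature. The minimal sketch below assumes a hypothetical heatmap given as a 2-D list of scores and a lesion region given as an inclusive bounding box; it is an illustration, not the group's evaluation procedure.

```python
def argmax_2d(heatmap):
    """Return (row, col) of the highest value in a 2-D list."""
    best = None
    for r, row in enumerate(heatmap):
        for c, value in enumerate(row):
            if best is None or value > best[0]:
                best = (value, r, c)
    if best is None:
        raise ValueError("heatmap is empty")
    return best[1], best[2]


def points_to_lesion(heatmap, box):
    """True if the heatmap's peak lies inside box = (row0, col0, row1, col1),
    inclusive, e.g. a lesion region confirmed by biopsy."""
    r, c = argmax_2d(heatmap)
    row0, col0, row1, col1 = box
    return row0 <= r <= row1 and col0 <= c <= col1


# Hypothetical 4 x 4 saliency map with its peak at row 2, column 3.
heatmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.2, 0.1, 0.0],
    [0.0, 0.3, 0.6, 0.9],
    [0.0, 0.1, 0.4, 0.5],
]
print(points_to_lesion(heatmap, (2, 2, 3, 3)))
```

A check like this only covers the "where" question. For predictions about a person's future health, there is no lesion box to compare against, which is why explainability becomes harder in that setting.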

J.W.: I think that explainability is often overrated. People believe that they know how to explain their own predictions, but there’s recent research that shows we’re not as good at that as we think. Humans still rely a lot on intuition and experience.

Today, a radiologist leans over to a colleague and asks, ‘What do you think about this case?’ And the colleague may say, ‘Yeah, this looks suspicious. Let’s ask for a biopsy.’ This is not very different from working with an AI system. The AI is basically a new colleague highlighting suspicious cases.

Different ways of imaging breasts—mammography, digital breast tomosynthesis, ultrasound, MRI—involve different kinds of sensing and different types of data. How do you deal with this technical variety, and what challenges does it present in building AI models for cancer detection?

K.G.: In each of these cases the images have different characteristics. For example, ultrasound images are usually very small but there are very many of them—there could be a hundred images in one ultrasound exam. In digital breast tomosynthesis, there are just a few images but each of them is really massive—about 1,000 by 1,000 pixels and potentially as many as 100 layers. So the neural networks just have to work differently. They’re built on the same general principles but don’t have the same architectures.
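A back-of-the-envelope calculation makes the scale difference concrete. The sketch below uses the DBT figures quoted above (about 1,000 by 1,000 pixels and up to 100 layers) and assumes each pixel is stored as a 4-byte float32 value. The ultrasound image size is a hypothetical assumption, since the interview only says those images are "very small."

```python
def stack_size(height, width, slices, bytes_per_value=4):
    """Return (values, bytes) for a stack of images; 4 bytes = one float32 per pixel."""
    values = height * width * slices
    return values, values * bytes_per_value


# Figures quoted above for one DBT volume.
dbt_values, dbt_bytes = stack_size(1_000, 1_000, 100)
print(dbt_values, dbt_bytes / 1e6)  # 100000000 400.0

# Hypothetical ultrasound exam: 100 images of 256 x 256 pixels (assumed size).
us_values, us_bytes = stack_size(256, 256, 100)
print(us_values, us_bytes / 1e6)    # 6553600 26.2144
```

Roughly 400 MB for a single DBT input, before counting the network's intermediate feature maps, is one reason a network for DBT cannot simply reuse an architecture designed for a batch of small ultrasound images.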

What is working with these different types of images teaching you about machine learning?

K.G.: I wouldn’t say necessarily that these projects teach us about machine learning, but they inspire us to think about machine learning problems that become very apparent in these applications. For example, learning with a lot of noise, learning from very large images, learning from a very large collection of small images, multimodal learning, and learning with weak supervision are problems that are not completely unique to breast cancer applications, but here the need to solve them becomes very clear.
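Weak supervision and "a very large collection of small images" often meet in the same exam: an ultrasound study may contain a hundred images but carry only one exam-level label. A common way to frame this is multiple-instance learning, in which per-image scores are pooled into one exam score and the loss is computed on that pooled score. The sketch below shows max and mean pooling under that assumption; it is a generic illustration, not necessarily the group's method, and the scores are hypothetical.

```python
import math


def exam_score(image_scores, mode="max"):
    """Pool per-image malignancy scores into one exam-level score.

    Under weak supervision only the exam carries a label, so the training
    loss is computed on this pooled score rather than on individual images.
    """
    if not image_scores:
        raise ValueError("exam has no images")
    if mode == "max":
        return max(image_scores)
    if mode == "mean":
        return sum(image_scores) / len(image_scores)
    raise ValueError(f"unknown mode: {mode}")


def binary_cross_entropy(p, label, eps=1e-7):
    """Log loss for one prediction, clipped to avoid log(0)."""
    p = min(max(p, eps), 1 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


# Hypothetical per-image scores for one exam labeled malignant (label = 1).
scores = [0.05, 0.1, 0.9, 0.2]
print(exam_score(scores), exam_score(scores, "mean"))
print(binary_cross_entropy(exam_score(scores), 1))
```

Max pooling says "the exam is as suspicious as its most suspicious image," which suits lesions visible in only a few images; mean pooling dilutes a single strong finding, which is why the choice of pooling matters.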

So, the types of technical challenges you’re facing are representative of the technology you’re working with—is that what you mean?

K.G.: Let me put it a little differently. One way to generate new machine learning research is to read a lot of conference papers and think, ‘this paper does x, that paper does y—how about we do x + y?’ But it’s very difficult to have any impact with this approach, because that’s thinking in terms of mixing available technologies to create something novel but without the context of where the result will be applied.

Working on a specific application forces you to look hard at your data, take existing techniques, and build the strongest model you possibly can. Once that is done—which is frequently pretty difficult—you have a chance to create a new technology that makes a difference. That’s the way to make lasting contributions.

If you manage to create something unique and useful, you can abstract a bit from the application and think about how it fits more generally in the machine learning framework.

J.W.: If you take a method that’s not designed for medical data, it might not work out of the box—you have to tweak it.

K.G.: Tweaking existing methods is one thing—and doing this intelligently can be challenging enough—but what we should really strive for is designing new methods that work well for medical applications and then also building generic forms with potential uses outside of medicine.

J.W.: Sometimes, when we talk about a problem, I tell Krzysztof, ‘Oh, it would be great to do this or that,’ and Krzysztof says, ‘Yeah, there’s a machine learning method that can do that.’ Often, methods already exist that don’t have great applications on non-medical data. So, when you see a particular problem in medical data, there’s an opportunity to connect the dots and get inspired.

The field of machine learning is so active, with the number of novel techniques increasing very fast. How do you stay abreast of new advances?

K.G.: That is very difficult to do. The field has grown so much that some branches—like reinforcement learning, for example—have become separate fields in their own right.

First, I follow the subfields of machine learning that are particularly interesting to me much more closely and read many more papers in them. Second, when I have an idea that falls a little further from the core of my interest, I speak to colleagues and we work out whether this idea has been done.

Then there are things we all do, like looking at papers from machine learning conferences and following our favorite scientists on Twitter, but of course this is not enough to know everything—that has become impossible.

Jan, you’re a physician by training. What is it like for you to be developing expertise in an area of computer science?

J.W.: Halfway through med school, I started working on a project with surgeons who were looking for ways to visualize liver anatomy in order to prepare for complex surgeries. We experimented with 3D printing and 3D rendering software, and that’s how I started programming and learning about computer science. When I was getting into AI, I did a mini proof of concept for detecting polyps in colonoscopy. So, I’ve always tried to be somewhere there on the edge between computer science and medicine.

I’m definitely not as great a physician as full-time physicians and not as great a computer scientist as full-time computer scientists. But because I’m somewhere in between, I think I understand medical data a little better than a computer science expert would, and I can judge what’s interesting for clinical applications a little more easily than people who haven’t worked with medical data.

Obviously, I can’t do cutting edge computer science research on my own, so it’s really helpful to have a team full of people who are great at that.

K.G.: There is tremendous value in having experts from different fields on one team working very closely. Just as Jan, working by himself, would not submit a paper to a machine learning conference, I would not submit a paper to a medical journal. But if we have two experts in two fields, then we can do super high-quality work between those fields.

It’s an example of two types of expertise coming together to produce something greater than the sum of its parts.

K.G.: That is really necessary if you want to do very high-quality work. And doing anything less is, I think, just not worth the effort.

Does it strike you that the findings of your research show a similar phenomenon? One in which two different types of expertise (human and machine) come together to produce results that surpass what either can deliver on its own?

K.G.: This phenomenon can be observed across many fields. In machine learning it’s called model ensembling. What model ensembling says is that if you have two models, each strong but based on a different principle, and you combine their predictions, the quality of the combined prediction will be higher than that of either model on its own.

It doesn’t surprise me at all that human-machine collaboration shows the same properties. It’s a clear special case of model ensembling. I think it is surprising to medical doctors who are not familiar with this concept, but most computer scientists who have taken a basic course in machine learning would find it unremarkable.

In general, the way we are trained as scientists at universities is quite limited. We are typically trained to be experts in a certain field, but the world doesn’t care about divisions between disciplines. So it’s pretty natural that in order to do top quality research you have to mix expertise from various fields.
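The model-ensembling idea Dr. Geras describes can be shown with a toy example. The sketch below averages the probabilities of two predictors that make different mistakes; the second predictor could stand in for a radiologist's suspicion score rescaled to [0, 1]. Simple averaging is only one of several ways to combine predictions, and all data here are hypothetical and constructed so that the errors do not overlap. Real gains depend on how complementary the two sets of errors are.

```python
def average_ensemble(*prob_lists, weights=None):
    """Weighted average of several models' predicted probabilities, case by case."""
    n_models = len(prob_lists)
    if weights is None:
        weights = [1.0 / n_models] * n_models
    return [
        sum(w * probs[i] for w, probs in zip(weights, prob_lists))
        for i in range(len(prob_lists[0]))
    ]


def accuracy(probs, labels, threshold=0.5):
    """Fraction of cases where thresholded predictions match the labels."""
    preds = [1 if p >= threshold else 0 for p in probs]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Hypothetical toy data: 1 = cancer, 0 = no cancer.
labels = [1, 1, 0, 0]
model_a = [0.90, 0.40, 0.20, 0.30]   # AI model; misses case 1
reader_b = [0.45, 0.90, 0.10, 0.30]  # rescaled reader score; misses case 0
combined = average_ensemble(model_a, reader_b)
print(accuracy(model_a, labels), accuracy(reader_b, labels), accuracy(combined, labels))
# 0.75 0.75 1.0
```

If both predictors made the same mistakes, averaging would not fix them; the benefit comes from the models being "based on a different principle," as the answer puts it.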

One of the resources that your research depends on is high-volume, high-quality medical image data, often linked to clinical information. How did you develop the kind of access you need both within NYU Langone and at other institutions?

K.G.: When I started here as assistant professor, I initiated collaborations with radiologists in the breast imaging section, and we created IRB protocols together to have access to data.

Of course, building a dataset in reality is not like building a dataset in an academic machine learning exercise. We’ve been assembling and expanding our data for several years now, and as we’ve done that we’ve also deepened our understanding of the dataset. Over time, we’ve been constructing new versions with more examples and with historical clinical information. It’s very difficult to do this in any way other than by iteratively trying to understand the data and asking for more relevant details that might be available.

In your new study on machine learning for breast cancer detection in MRI, you and coauthors also use a dataset from the Jagiellonian University in Krakow, Poland. In prior work on ultrasound, you and colleagues used data from the Hospital for Early Detection and Treatment of Women’s Cancer in Cairo, Egypt. Can you talk about the role external sources of data play in your research, why they’re important, and how you seek them out?

J.W.: We heavily rely on external datasets in our analyses. When you publish machine learning research at a high level, practitioners, both doctors and researchers, expect you to prove that your model works not just on data of the same type and from the same population as the data you developed it on, but also on other populations.

This is one of the reasons we try to use datasets from all over the U.S., Europe, and elsewhere. Sometimes those datasets are available publicly—that’s a big gift to any researcher. In our latest study, we used two public datasets, but we always also look for collaborators. People in the machine learning community understand that researchers love data. Sometimes, when you talk to colleagues from other institutions, one of the first questions you ask is ‘Can we evaluate our model on your data?’ or ‘Can you evaluate your model on our data?’

In the case of Poland, we show that our model is as good as the radiologists interpreting MRI exams on the Polish dataset, too. We use all of the MRIs performed at Jagiellonian University Hospital between 2019 and 2021, so we are pretty comprehensive there.
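External validation usually means computing the same metric separately for each data source and comparing. The sketch below computes ROC AUC per site using its rank interpretation (the probability that a randomly chosen positive case outscores a randomly chosen negative one, with ties counted as half). The site names and scores are hypothetical toy values and do not reflect the study's results; for real evaluations, a library implementation such as scikit-learn's `roc_auc_score` is the usual choice.

```python
from collections import defaultdict


def auc(labels, scores):
    """ROC AUC as P(random positive scores above random negative), ties = 0.5.
    O(P * N) pairwise version, fine for small illustrative examples."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def auc_by_site(records):
    """records: iterable of (site, label, score). Returns {site: AUC}."""
    grouped = defaultdict(lambda: ([], []))
    for site, label, score in records:
        grouped[site][0].append(label)
        grouped[site][1].append(score)
    return {site: auc(ls, ss) for site, (ls, ss) in grouped.items()}


# Hypothetical model outputs on an internal and an external test set.
records = [
    ("internal", 0, 0.1), ("internal", 0, 0.4), ("internal", 1, 0.35), ("internal", 1, 0.8),
    ("external", 0, 0.5), ("external", 1, 0.2), ("external", 0, 0.6), ("external", 1, 0.7),
]
print(auc_by_site(records))
```

A drop from one site to another, as in this toy data, is exactly the kind of signal external validation is meant to surface before a model is trusted on a new population.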

K.G.: External data is very important because it often allows you to test not just whether your model is robust to images that look slightly different but also whether it’s robust to a different method of data collection.

People often misunderstand the ambiguity inherent in medical classification tasks. ‘Predict whether someone has cancer in mammography’ sounds very straightforward, but it can mean so many different things. It could mean detecting whether the person has cancer at the moment, whether the person has invasive cancer, whether the person has something suspicious that might be cancer. The differences between these questions sound subtle, but if datasets are collected differently, the differences could have a totally devastating effect on the performance of the model. So, those external validations serve as the final check of whether we actually did something valuable.
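The ambiguity Dr. Geras describes can be made concrete: the same exams yield different labels, and different positive rates, depending on which question "has cancer" is taken to mean. The sketch below encodes three hypothetical labeling rules matching the three readings in his answer. The field names and toy exams are assumptions for illustration; real datasets encode these outcomes in very different ways.

```python
from dataclasses import dataclass


@dataclass
class Exam:
    # Hypothetical fields; real datasets record these outcomes very differently.
    cancer_now: bool          # pathology-confirmed cancer at the time of the exam
    invasive: bool            # the cancer, if any, is invasive
    suspicious_finding: bool  # radiologist flagged something that might be cancer


LABEL_RULES = {
    "cancer_now": lambda e: e.cancer_now,
    "invasive_cancer": lambda e: e.cancer_now and e.invasive,
    "suspicious": lambda e: e.suspicious_finding,
}


def positive_rate(exams, rule):
    """Fraction of exams labeled positive under the named labeling rule."""
    labels = [LABEL_RULES[rule](e) for e in exams]
    return sum(labels) / len(labels)


exams = [
    Exam(True, True, True),
    Exam(True, False, True),    # non-invasive cancer
    Exam(False, False, True),   # suspicious finding, benign at pathology
    Exam(False, False, False),
]
for rule in LABEL_RULES:
    print(rule, positive_rate(exams, rule))
```

A model trained on one rule and tested on data labeled under another would be scored against a different target, which is how a difference in data collection can quietly damage measured performance.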

How would you describe your overarching research project? What is it that you’re trying to achieve and how far do you think you are from that goal?

K.G.: The big goal of our research group in terms of applications to breast imaging is building this multimodal neural network that learns from all available image types. We’re hoping that we will have a network with clearly superhuman performance and that we’ll be able to learn something new about breast cancer.

Superhuman performance would be a marked advancement over the kind of results you have been publishing.

K.G.: What we have right now are neural networks that are roughly on par with expert readers for each imaging modality. So, in those isolated experiments where we measured the ability of radiologists to identify cancer in different imaging exams, we found that for all of them AI was similar to the best readers we have. But humans have limited ability to fuse information from multiple types of images, so what we are hoping is that multimodal fusion, which is possible within neural networks, will give the model a boost, making it basically impossible for humans to compete with.

Of course, we don’t know at the moment whether that’s going to happen, but that’s the goal.
